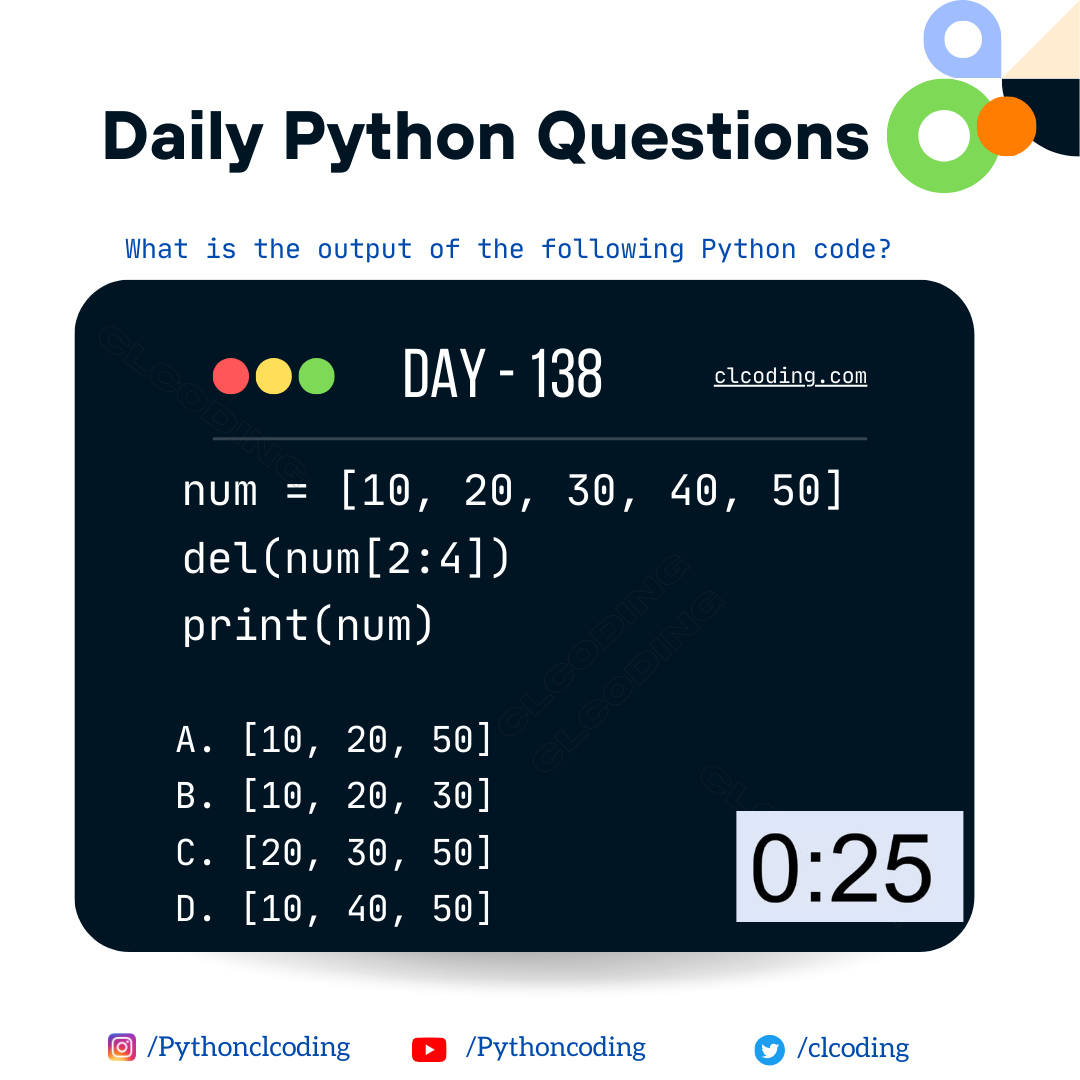

Let's break down the code step by step:

Function Definition:

    def custom_function(b):

This line defines a function named custom_function that takes a parameter b.

Conditional Statements:

    if b < 0:
        return 20

This block checks if the value of b is less than 0. If it is, the function returns the integer 20.

    if b == 0:
        return 20.0

This block checks if the value of b is equal to 0. If it is, the function returns the floating-point number 20.0.

    if b > 0:
        return '20'

This block checks if the value of b is greater than 0. If it is, the function returns the string '20'.
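Putting the three checks together, the function can be reassembled roughly as shown here. The indentation is an assumption based on standard Python layout, since the original code appears only as an image. The loop at the bottom is not part of the original; it is added to show that each branch returns a different type.

```python
def custom_function(b):
    # Negative input: return the integer 20
    if b < 0:
        return 20
    # Zero: return the float 20.0
    if b == 0:
        return 20.0
    # Positive input: return the string '20'
    if b > 0:
        return '20'


# Illustrative check (not in the original code): one sample value per branch
for value in (-3, 0, 3):
    result = custom_function(value)
    print(value, type(result).__name__)
# -3 int
# 0 float
# 3 str
```

Each branch ends in a return, so at most one of the three blocks runs for any given call.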

Function Call:

    print(custom_function(-3))

This line calls custom_function with the argument -3 and prints the value it returns.

Output Explanation:

The argument is -3, which is less than 0. Therefore, the first condition is true.

The function returns the integer 20.

The print statement then outputs 20.

So, the output of the provided code will be:

20

Because the function returns as soon as the first condition matches, the checks for b == 0 and b > 0 are never reached for this call.
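One detail worth checking for yourself: print shows the integer 20 and the string '20' the same way, so the printed output alone does not reveal which branch ran. The sketch below restates the function (with assumed indentation) and uses repr, which is an addition for illustration and not part of the original code, to make the difference visible.

```python
def custom_function(b):
    if b < 0:
        return 20
    if b == 0:
        return 20.0
    if b > 0:
        return '20'


print(custom_function(-3))        # 20    (integer)
print(custom_function(3))         # 20    (string, printed without quotes)
print(custom_function(0))         # 20.0  (float)

# repr shows the quotes, so the int and the str can be told apart
print(repr(custom_function(-3)))  # 20
print(repr(custom_function(3)))   # '20'
```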
